Universal Accessibility

Research

Universal Accessibility focuses on serving the widest possible set of users.

USER Lab is especially concerned with developing methods to analyze,

design, and evaluate systems to improve their universal accessibility.

USER Lab has developed a series of reference models for Accessible Systems and for Assistive Technologies.

The UA project team includes:

- Jim Carter
  - International convenor (chair) and expert member of ISO/IEC JTC1/SC35/WG6 User Interface Accessibility
  - Canadian expert member of ISO TC159 (SC4/WG5 and WG6; WG2) and ISO/IEC JTC1 SWG Accessibility
- Lisa Tang
  - Accessibility engineering graduate student
- Tanya Lung
  - Accessibility engineering graduate student
- Jared Boyko
  - Accessibility research assistant
- David Fourney, Associate Member
  - Accessibility advocate
  - Canadian expert member of ISO TC159/SC4/WG5 and ISO/IEC JTC1/SC35/WG6

Some major UA insights from our team

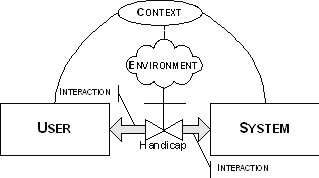

The Universal Access Reference Model (UARM), as illustrated in Figure 1, concentrates on the interactions between a user and a system. Handicaps are anything that may interfere with the accessibility of interactions between users and systems. A handicap may have one or many sources among the system, user, interaction, and/or environment. This model is blame-free, since overcoming any handicap is more important than attributing blame to the source of the handicap.

Figure 1. Universal Access Reference Model (UARM)

The figure uses a pipe metaphor to illustrate the flow of interactions between the user and the system, and a valve metaphor within the pipe to illustrate various levels of handicap. A fully open valve represents the absence of a handicap. A fully closed valve represents being fully handicapped. Any other setting of the valve represents being partially handicapped. The environment and any context that is shared between the user and the system can affect the flow through the valve positively and/or negatively.

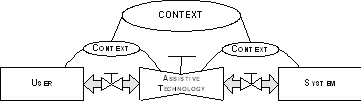

Essentially, an assistive technology (AT) is a means of opening the valve: ATs reduce handicaps. While a consumer of an AT may not have a disability, there is some component of the interaction that is handicapping them. For example, one could attend a lecture where the speaker uses a language unknown to the listener. Since most people know at least one language, the listener may eventually come to know the language the presentation is given in, but is currently handicapped by not knowing it. The listener's task of following the details of the presentation would not be possible without a translator to bridge the interaction between the listener and the speaker. In this sense, the translator would be an AT.

Assistive technologies function to open the valve between systems and users, as illustrated in Figure 2. An AT serves as a proxy within the interaction between the user and the system: it provides contexts compatible with both the user and the system, and translates specific media types/channels in each direction. For this reason, the AT is drawn not as a box but as a modified valve. It is hoped that the AT becomes an invisible partner within the interaction.

Figure 2. Positioning Assistive Technology in the UARM
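One way to make the valve metaphor above concrete is to treat the valve setting as a number between 0 (fully closed, fully handicapped) and 1 (fully open, no handicap). The Python sketch below is illustrative only: the numeric scale, the class name `Valve`, and the additive treatment of environment and AT effects are assumptions made for this example and are not part of the UARM itself.

```python
from dataclasses import dataclass


def clamp(value: float) -> float:
    """Keep a valve setting within the range 0.0 to 1.0."""
    return max(0.0, min(1.0, value))


@dataclass
class Valve:
    """Hypothetical numeric reading of the UARM valve.

    openness = 1.0 means no handicap, 0.0 means fully handicapped.
    """
    openness: float

    def with_environment(self, effect: float) -> "Valve":
        # The environment may help (positive) or hinder (negative) the flow.
        return Valve(clamp(self.openness + effect))

    def with_assistive_technology(self, gain: float) -> "Valve":
        # In this sketch an AT only ever opens the valve.
        if gain < 0:
            raise ValueError("an AT is modeled as reducing handicaps")
        return Valve(clamp(self.openness + gain))

    def describe(self) -> str:
        if self.openness >= 1.0:
            return "no handicap"
        if self.openness <= 0.0:
            return "fully handicapped"
        return "partially handicapped"


# A listener who does not know the speaker's language: valve closed.
lecture = Valve(openness=0.0)
# A noisy room cannot close an already closed valve any further.
noisy = lecture.with_environment(-0.2)
# A translator acts as the AT and opens the valve.
translated = noisy.with_assistive_technology(0.9)
```

The model stays blame-free in the same sense as the UARM: the sketch records how open the valve is, not which party caused it to close.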

Our UARM is being used in:

- ISO IS 9241-20, Ergonomics of human-system interaction: Accessibility guidelines for information communication equipment and services, General Guidelines, as a basis for organizing high-level ergonomic guidance for computer (hardware and software) systems.
- ISO 9241-171, Guidance on Software Accessibility, in order to expand the set of guidance beyond that from ISO TS 16071.
- ISO/IEC 24756, Framework for specifying a common access profile (CAP) of needs and capabilities of users, systems, and their environments, which expands upon the Universal Access Reference Model to provide a basis for describing and evaluating the accessibility needs and issues of users, systems, and environments.

Current Research Directions: Creating Alternative Text

Although a picture is worth a thousand words, what might those thousand words be? Currently, on most web pages, alternative text (alt-text) for images does not exist. Even when it is available, the description is often uninformative and limited. This is the result of telling developers that they need to create alt-text without giving them sufficient guidance (or even any guidance) on what it should contain. It is no wonder alt-text is so poorly done. This project involves efforts to guide developers to create good alt-text.

Guidance on Creating Alternative Text

While guidance exists on the technical methods for providing alt-text (such as the alt and longdesc attributes of the HTML img tag) [2,8], there is no such guidance on what to include as alt-text. As a result, developers do not know what to write, and poor, uninformative descriptions often result. Before developers can write informative alt-text, they need to know what information is present in the image. We researched the various recommendations on how images can be described within a variety of domains, including accessibility, information sciences, and information technology. While many recommendations deal only with particular types of images, we recognized the need for a single comprehensive method that could be applied to all types of images.
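The technical side of alt-text, unlike its content, is easy to check mechanically. As a minimal sketch, the following Python uses the standard library `html.parser` module to report whether each img tag has an alt attribute at all. The class name `ImgAltAudit`, the `audit` helper, and the three status labels are hypothetical names chosen for this example. The check says nothing about whether the text that is present is informative.

```python
from html.parser import HTMLParser


class ImgAltAudit(HTMLParser):
    """Collect img tags and classify their alt attribute."""

    def __init__(self) -> None:
        super().__init__()
        self.findings = []  # list of (src, status) pairs

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attr_map = dict(attrs)
        src = attr_map.get("src") or "(no src)"
        if "alt" not in attr_map:
            status = "missing"   # no alt attribute at all
        elif not (attr_map["alt"] or "").strip():
            status = "empty"     # alt="" or whitespace only
        else:
            status = "present"   # some text exists; quality is unknown
        self.findings.append((src, status))


def audit(html: str):
    """Return a (src, status) pair for every img tag in the markup."""
    parser = ImgAltAudit()
    parser.feed(html)
    parser.close()
    return parser.findings


sample = """
<img src="image-1.png">
<img src="image-2.png" alt="">
<img src="chart.png" alt="Positioning Assistive Technology in the UARM">
"""
results = audit(sample)
for src, status in results:
    print(f"{src}: alt {status}")
```

An empty alt attribute is reported separately from a missing one because an empty value can be a deliberate choice for purely decorative images.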

Our research led to the creation of “Guidance on

creating alternative-text for images” that was submitted by the

Standards Council of Canada to ISO/IEC JTC1 Information Technology /

SC35 User Interfaces as the basis of a new standards project.

Tool for Helping Developers Create Alternative Text

We are currently prototyping a tool that helps developers to apply the “Guidance on creating alternative-text for images”. We expect that a public distribution of the tool will be available in Spring 2011.

Current Research Directions: Tools to Expand Accessibility

While text is relatively easy to shift between modalities (presenting it visually on displays, aurally via voice synthesis, or tactilely via Braille or other tactile symbols), pictures, diagrams, and other multi-dimensional spaces have yet to be made universally accessible. USER Lab currently has a variety of research projects exploring the various dimensions of this complex research area, including:

Developing Accessible Development Tools

There is a lack of accessible software development tools to support visually impaired computer professionals. (This is yet another case of "the shoemaker's children have no shoes.") Most software engineering and database design tools are highly visual and graphic. They are generally built with only sighted users in mind and do not provide the information needed to internally visualize the database being created. This project is investigating requirements and techniques for making graphical development tools accessible, regardless of visual capabilities.

Tanya Lung is currently researching the use of auditory and tactile/haptic interactions in making software development tools accessible. This research is an outgrowth of her CMPT 840 project, which developed DBVisAssist to assist a non-sighted student in a database course for which she was the tutor.

DBVisAssist is a tool that enables auditory-visual diagramming of Entity-Relationship (ER) models. DBVisAssist presents tables, attributes, and relationships to both sighted and non-sighted users in an understandable and accessible format. Users are able to create and modify a diagram, as well as navigate through a database structure, regardless of their sight capabilities.

With such a tool, visually impaired users can more easily design databases and understand database designs created by others, gaining benefits previously available only to sighted designers.
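To illustrate the general idea of presenting an ER model without relying on sight, the Python sketch below turns a small model into a fixed sequence of sentences that a speech synthesizer could read aloud. This is not DBVisAssist's actual design, which is not described here. The `Entity` and `Relationship` classes, the `describe_model` function, and the sentence wording are all assumptions made for this example.

```python
from dataclasses import dataclass, field


@dataclass
class Entity:
    name: str
    attributes: list = field(default_factory=list)


@dataclass
class Relationship:
    name: str
    source: str        # name of the entity on one side
    target: str        # name of the entity on the other side
    cardinality: str   # for example "one-to-many"


def describe_model(entities, relationships):
    """Return one sentence per entity, then one per relationship."""
    lines = []
    for entity in entities:
        attrs = ", ".join(entity.attributes) or "no attributes"
        lines.append(f"Entity {entity.name} with attributes: {attrs}.")
    for rel in relationships:
        lines.append(
            f"Relationship {rel.name}: {rel.source} to {rel.target}, "
            f"{rel.cardinality}."
        )
    return lines


entities = [
    Entity("Student", ["id", "name"]),
    Entity("Course", ["code", "title"]),
]
relationships = [
    Relationship("Enrolls", "Student", "Course", "many-to-many"),
]
spoken = describe_model(entities, relationships)
for sentence in spoken:
    print(sentence)
```

Keeping the order fixed (entities first, then relationships) is a deliberate choice in this sketch: a predictable sequence lets a listener build a mental picture of the structure without having to scan a diagram.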

Finding Your Way to a Location That Involves Complex Directions

Locating a classroom for the first time in a large university can be a major challenge even for fully sighted students. There are often too many twists, turns, and distances to remember and use along the way. This poses a significant cognitive load for many people. The problem is all the more acute for non-sighted students.

At the same time, most people can learn and recognize the many twists and turns in a piece of music. This is because of their ability to work with and internalize rhythms. Rhythms also apply to navigation. Consider how most people can get up in the middle of the night and, without the use of lights, navigate from their bed to either the toilet or the refrigerator. While not as complex as finding one's way to most university classrooms, this can still involve a number of twists, turns, and different distances, and is often done without having to consciously think about it.

This project is investigating the use of rhythms in learning complex sets of directions for walking to classrooms in a university. It recognizes that while GPS (the Global Positioning System) can aid navigation in public spaces, it is a long way from aiding navigation in the corridors of higher learning. This is at least in part due to the nature of university buildings, where many different doors and corridors are in very close proximity to each other and where the characteristics of the buildings can inhibit getting an accurate position with current technology. This research is also intended to give the user a sense of how to navigate an unfamiliar space prior to the first encounter with it.

Jared Boyko is currently researching the use of Wii (TM) controllers to teach people the rhythms of how to navigate from one location to another within a university.

Previous Research

Research into Comparing Capabilities

David Fourney developed the Common Access Profile (now standardized in ISO/IEC 24756) to aid in identifying handicaps to accessibility and in identifying assistive technologies that can overcome these handicaps.

Research into Describing Pictures

Pictures only say a thousand words if you can see them and understand what you see. With some pictures (including would-be illustrative diagrams in textbooks), seeing is not enough to ensure understanding. Databases of pictures, even in major art galleries, tend to have very little data describing the actual pictures and even less that can be used to support finding pictures that meet various user-determined sets of criteria. Despite advances in computer vision, it is not yet feasible for computers to understand the content and significance of pictures in a way that can support users. The only practical way to find all the pictures in a database that meet some visual or conceptual criteria is to have a sighted person who understands what to look for spend the time to look at every one of them. While humans are often the most effective form of assistive technology, relying on human assistants to perform such a task whenever and wherever it occurs is seldom feasible or desirable.

Understanding the contents and significance of pictures presents accessibility issues for more than just the visually impaired. While Alt-text provides a technique for making information on pictures accessible in Web and other technologies, the problem is having good information to access. Developers faced with the requirement to provide Alt-text often just copy the caption of a picture into the Alt-text field, thus defeating the purpose of requiring Alt-text in the first place. Automatic Web accessibility evaluators are not able to determine whether any Alt-text is meaningful or not.
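Although a program cannot judge whether Alt-text is meaningful, a few of the symptoms mentioned above can be flagged by simple heuristics. The Python sketch below is one such set of heuristics and is an assumption of this example, not a description of any existing evaluator: the function names, the warning messages, and the three-word threshold are all hypothetical. Passing these checks does not make Alt-text good. It only rules out a few obviously poor cases.

```python
import re


def normalise(text: str) -> str:
    """Lowercase the text and collapse punctuation and whitespace."""
    return re.sub(r"[^a-z0-9]+", " ", text.lower()).strip()


def alt_text_warnings(alt: str, caption: str = "", src: str = ""):
    """Return a list of warnings for one image's Alt-text."""
    warnings = []
    alt_norm = normalise(alt)
    if not alt_norm:
        return ["alt-text is empty"]
    # Copying the caption adds no information beyond the visible text.
    if caption and alt_norm == normalise(caption):
        warnings.append("alt-text duplicates the caption")
    # Compare against the file name without directory or extension.
    stem = src.rsplit("/", 1)[-1].rsplit(".", 1)[0]
    if stem and alt_norm == normalise(stem):
        warnings.append("alt-text is just the file name")
    # Arbitrary threshold: fewer than three words rarely describes a picture.
    if len(alt_norm.split()) < 3:
        warnings.append("alt-text is very short")
    return warnings


copied = alt_text_warnings(
    "Figure 1. Universal Access Reference Model (UARM)",
    caption="Figure 1.Universal Access Reference Model (UARM)",
)
filename_only = alt_text_warnings("image 1", src="img/image-1.png")
descriptive = alt_text_warnings(
    "A pipe connects a user to a system, with a half-open valve in the middle",
    caption="Figure 1. Universal Access Reference Model (UARM)",
)
```

Here `copied` reports the duplicated caption, `filename_only` reports both the file name and the short length, and `descriptive` produces no warnings.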

There is a need for developing an approach to describing the contents

of pictures in a meaningful way, that is accessible to both users and

developers. This project is investigating various aspects of this

problem, including:

- describing meaningful objects within a picture, both in terms of physical object type and attributes and in terms of their semantic meaning
- describing spatial and logical relations between objects within a picture
- allowing users to obtain information about a picture and to navigate between objects within a picture
- aiding developers to use these concepts to develop meaningful Alt-text
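The aspects listed above suggest a simple data structure: objects with a type, attributes, and a meaning, linked by named relations that can be followed from one object to the next. The Python sketch below shows one possible shape for such a structure. It is not the database design or tool produced by this project, and every class, field, and method name in it is hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class PictureObject:
    object_id: str
    object_type: str                       # physical object type
    attributes: list = field(default_factory=list)
    meaning: str = ""                      # semantic meaning in the picture


@dataclass
class Relation:
    subject: str    # object_id of the object being described
    kind: str       # spatial or logical relation, for example "inside"
    target: str     # object_id of the related object


@dataclass
class Picture:
    objects: dict
    relations: list

    def neighbours(self, object_id: str):
        """Objects reachable from object_id by following one relation."""
        return [r.target for r in self.relations if r.subject == object_id]

    def describe(self, object_id: str) -> str:
        """Build a one-line description of an object and its relations."""
        obj = self.objects[object_id]
        parts = [" ".join(obj.attributes + [obj.object_type])]
        if obj.meaning:
            parts.append(f"representing {obj.meaning}")
        for r in self.relations:
            if r.subject == object_id:
                parts.append(f"{r.kind} the {self.objects[r.target].object_type}")
        return ", ".join(parts)


picture = Picture(
    objects={
        "pipe": PictureObject("pipe", "pipe", ["horizontal"],
                              "the flow of interactions"),
        "valve": PictureObject("valve", "valve", ["partially open"],
                               "a partial handicap"),
    },
    relations=[Relation("valve", "inside", "pipe")],
)
valve_text = picture.describe("valve")
print(valve_text)
```

A user could start at any object, hear its description, and then move to one of its `neighbours`, which is one way to support navigation between objects within a picture.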

Mr. Alan Wolinski investigated database design for describing objects and their relationships within a picture. Mr. John Ylioja investigated methods to support developers in generating textual representations of images. Various other past and current projects in CMPT 480 / 840 have also contributed to this research area.

Ms. Lisa Tang is now conducting advanced research in this area, with the aim of developing both an ISO/IEC technical report providing guidance and a software tool to help apply the guidance.

Select UA publications by our team members include:

L. Tang and J. Carter, Alternative Text for Images on the Web, paper proposal for CSUN 2011.

J. Carter and L. Tang, User interface component accessibility — Guidance on creating alternative text for images, submitted to ISO/IEC JTC1/SC35/WG6 by the Canadian Advisory Committee on User Interfaces, of the Standards Council of Canada.

D. Fourney and J. Carter, 2008, The Ongoing Evolution of Standards to Meet the Needs of the Deaf and Hard of Hearing, Proceedings of the 2008 Annual Meeting of the Human Factors Society, New York, NY, USA, pp. 561-565.

L. Tang, D. Fourney, F. Huang, and J. Carter, 2008, Secondary Encoding, Proceedings of the 2008 Annual Meeting of the Human Factors Society, New York, NY, USA, pp. 566-570.

J. Carter and D. Fourney, 2007, Techniques to Assist in Developing Accessibility Engineers, Assets'07, Tempe, AZ.

D. Fourney and J. Carter, 2007, I Want My Money! Tactile Access To Automated Banking Machines, Proceedings of Workshop on Tactile and Haptic Interaction, Tokyo, Japan.

D. W. Fourney and J. A. Carter, 2006, Ergonomic Accessibility Standards, Proceedings of 16th International Conference on Ergonomics, International Ergonomics Association, Maastricht, The Netherlands.

D. Fourney and J. Carter, 2006, A standard method of profiling the accessibility needs of computer users with vision and hearing impairments, Proceedings of CVHI 2006 Conference and Workshop on Assistive Technologies for Vision and Hearing Impairment, EURO-ASSIST-VHI-4, Kufstein, Austria, July, 6 pages.

D. Fourney and J. Carter, 2006, A standard method of profiling accessibility needs (using the CAP), Proceedings Conference on e-Society 2006, International Association for Development of the Information Society, Dublin, Ireland, Vol. II, pp. 138-142.

J. Carter, 2006, Temporal issues regarding accessibility, Proceedings of the Workshop on Accessible Media, University of Potsdam, Potsdam, Germany.

D. Fourney and J. Carter, 2006, The Future Looks Bright: International Standards for Accessible Software, Proceedings of the CSUN 2006 Technology and Persons with Disabilities Conference, Los Angeles, CA.

J. Carter and D. Fourney, 2005, A Common Accessibility Profile for Selecting and Using Assistive Technologies, manuscript in production.

J. Carter and D. Fourney, 2003, Using a Universal Access Reference Model to Identify Further Guidance that Belongs in ISO 16071, Universal Access in the Information Society, 31(1) 17-29.

J. Carter and D. Fourney, 2003, The Canadian Position Regarding the Evolution of ISO TS 16071 Towards Becoming an International Standard, ISO TC159/SC4/WG5 document N0731.

Classes we offer relating to UA

Please note: This page does not use fancy frames or tables, and all graphics are fully explained, in order to increase accessibility for individuals with special needs.